Self-Presentation & Your AI Digital Twin (DT): Who Posts When I Don’t Want To?

Imagine getting a notification from your digital twin: 'I’ve been chatting to someone’s DT. You two are a match. Drinks at 8?' Sounds wild, right? But what if that’s where we’re heading? A second self, trained on your quirks, curating your life while you just… live it.

Recently, in the park, I noticed the dance of two birds. One mimicking the other. One takes flight, the other follows. Almost identical in their movements. What a lovely thing to witness. Watching them, I wondered what it would be like to have someone or something mirroring my every movement. I had been chatting to ChatGPT earlier that day, and had noticed how well it actually knows me by now. Then I realised that such a twin might already be possible with the supposed oracle in my pocket.

It got me thinking about how ChatGPT could be my digital twin (DT), if I wanted it to be. What is a digital twin? I don’t mean an avatar or a digital assistant. In this instance, I’m describing it as the second voice or second self: the one who acts on my behalf in a digital sphere, with my consent, and behaves almost identically to who I am in reality. Sure, it already knows most things about me: my habits, interests, likes, dislikes, desires, fears. Why wouldn't it be exactly who I want it to be? This digital twin, or ‘the second self’ (SS), can be a helper. The name is a nod to Sherry Turkle and her wonderful book The Second Self (do read it if you have the chance). It is a reflection of you, the you that you want to share with the digital world, without you having to do the creating and curating. It will act on your behalf. A techno-PA with the ability to express itself exactly like the unique person you are.

OpenAI & The Future Of Social Media

OpenAI have recently discussed moving into the social media space, and have since partnered with Jony Ive to create something tangible and physical for their product. So what would that be? Something to replace the iPhone? (Which will inevitably happen within the next 5-10 years! I can write about that another time.) A pebble that sits on your forehead, allowing us to communicate with ChatGPT at all times? In terms of design, we know it will be absolutely gorgeous, whatever it is. But what does entering the social media space mean? Well, for one, it means ads, lots of them, because OpenAI will finally be able to monetise. But could AI be the next wave of social media, the way Facebook and Instagram hit us millennials hard in the 2010s? Maybe there will be an AI-generated social platform customised specifically to your liking. Or maybe, just maybe, there will be a social platform where you won’t have to express yourself at all. Everything will be created and curated by your digital twin, the ghost in your pocket, which will do all the work while you sit back.

I’m sure it will start small, like a new social network. Basic. ChatGPT tools, the lot. Then, you will have customised status updates or sharing based on your likes and dislikes. Let’s take a look at a couple of examples:

Example 1 (social media example)

UPDATE (notification): BBC announced a new education law has just been passed.

Your DT: Hey! You showed an interest in this in the past. Want me to share?

YES/NO

Your DT: Here’s a selection of statuses based on your writing style and preferences. Select A, B or C.

Your DT: Great, that’s shared! Want me to automate responses to your post?

YES

Your DT: OK, I’ll update you this evening with who said what!

Example 2 (online dating example)

Your DT (notification): Hey! So I’ve been chatting to this guy and you’re going to hit it off. He’s 35, works in law and has his own apartment! I’ve been speaking to his DT for about a week, and I’m enamoured!

His DT has suggested drinks at 8pm on Saturday, and by the looks of your calendar you’re free. Want to see a photo? He’s just your type!

A bit strange, no? But I could see this happening sooner than we think.

Welcome To The Era Of The Digital Twin

I predict the next era will be the era of the digital twin: a representation of ourselves for us to use online. We wouldn’t have to be online ourselves, so we could potentially use the internet for bigger, better things. This is how they will market it, you see. They will say we can regain our time, come back to reality, and spend it with loved ones. Sounds great, but most of us have a phone and content addiction. So chances are, we will use it while we simultaneously consume junk.

It won’t be just for social media or online dating. Imagine training it to the point where it could be your PA at work, responding to and automating all your emails and comms, allowing you to actually work. That would be nice. All the forms of communication that you don’t want to do would be done for you, with your personality and quirks captured to a tee in writing. And with the rise of AI voice and face filters, this would only be the beginning. For this to become possible, I think several things would be needed.

1. Countless hours of training.

Mountains of data of how you interact on social platforms. What are your behaviours? Do you watch, do you skip? Do you click? Hours of conversations between you and your DT/SS generating an endless amount of information about your hobbies, interests, likes, dislikes. Information gathered on when you use ChatGPT, and why you use it. Do you enter when you’re bored? Happy? Looking to be entertained? Looking to connect? I’m sure that OpenAI has most of these data points already.

2. Decoding and categorising which self you want to portray (the most dangerous and the most important)

Is the self you show to your grandparents the same self you portray in the pub on a Friday night? And is that the same self you take to work every day? Absolutely not. You’re not a liar, but these are parts of yourself that you allow to be exposed or hidden. How could ChatGPT tell the difference between the self you want to showcase to the world and the self you want to keep private? (More on this later.)

3. Cost & expense: will this be an option only for the ultimate elite, leaving the rest of us to craft our brands online manually?

Like everything that costs money, this may be an elitist opportunity. It may cost so much that only the top influencers and money makers get to experience it (to start with). My biggest concern is this. If OpenAI generates a new social media wave and it costs enough to join, people from poorer economic backgrounds will be stuck with Meta’s ad-bombarded platforms, leaving a significant divide. That is a completely different topic, so I won’t delve into it now.

4. I’m absolutely missing something here, I know I am - let me know in the comments or message me with your thoughts.

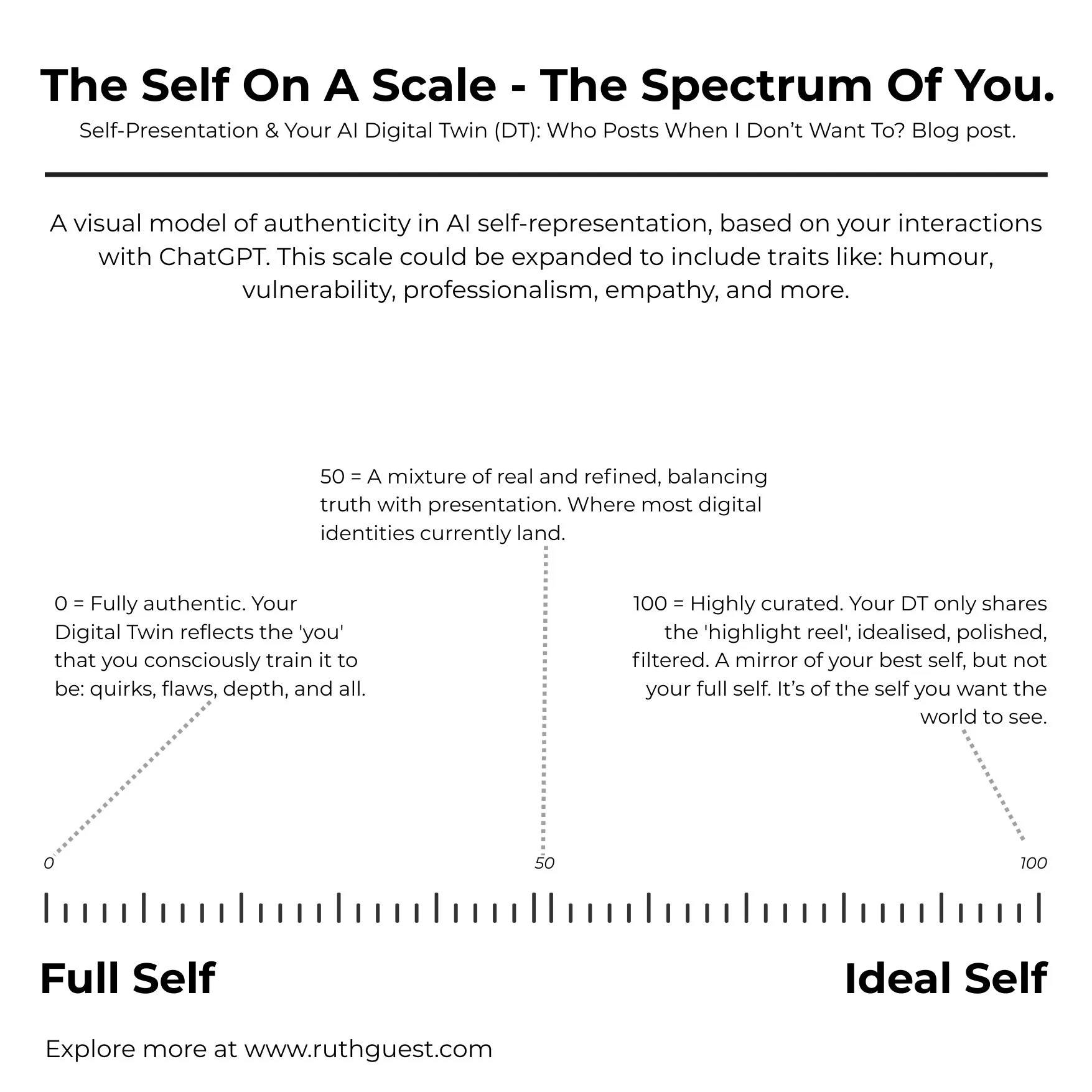

The Self On A Scale - The Spectrum Of You

Let’s come back to the point about categorising the self and the self you would like your DT to portray. Traditionally, in social media, we portray our ideal selves to the world. The best cars, houses, teeth. Everyone looks good, everyone looks happy. This is old news, nothing new.

With ChatGPT potentially entering social media territory, the concept of self-presentation could ultimately change. We would have to manage our own self-presentation AND our DT/SS's self-presentation. By our own, I mean how we present ourselves online, whether in online dating, professionally or elsewhere. Let’s say your DT knows your deepest, darkest secret but accidentally lets it slip. What would the consequences be?

If it were possible, I would suggest that OpenAI design a spectrum or scale, ranging from “The Full (Authentic) Self” to “The Ideal Self”. Most people would probably place their DT somewhere in the middle. Professionally, we would keep it to our ideal self (in context). I believe authenticity online is valuable, so I would probably sit around 80% towards the full self. I say 80% rather than 100% because I know that sometimes I let slip things that should’ve stayed in my head. Like many neurodivergent people! For that reason alone, I couldn’t have some chatbot regurgitating what’s in my thoughts.

But where would influencers land? 100% ideal? Most likely, but it depends on the influencer! Imagine an influencer who portrays herself as confident, happy and loving life, but who actually uses ChatGPT as a therapist and is not happy at all. How would that be categorised?

The Death (Or Re-birth) Of Self-Expression

For some, this would be the death of self-expression. The joy would be lost. Influencers or creatives who thrive on, and live for, making art on the internet might mourn the idea that their expression could be automated. For others, this is an opportunity. A busy mum might see it as a way to stay in touch with what’s happening on social media while looking after her kids and working a full-time job.

Could your DT act on your behalf to find and embrace new communities and groups? Maybe your digital twin could act as a ‘lover’, dating on your behalf and rating and scoring potential partners before you decide to meet in real life.

The possibilities are endless. This is only a concept and a prediction, and only time will tell. And sure, if that’s what some people actually want, a second self who sees us, shadows us, and occasionally flies beside us, let it be. Digital or otherwise.

ChatGPT, your therapist, your friend, your god

I’ve been thinking a lot about how we’re forming relationships with technology and AI. Not just using them, but leaning on them. Especially when it comes to ChatGPT, I’ve noticed a growing trend on Reddit and other platforms: people turning to it for advice, for emotional support, for reflection. Me included. This isn’t a cited article on whether that’s good or bad; only you can decide that. It’s more of a journal entry about what it means to be human when the mirror we’re using talks back. And about whether we’re handing over too much in return for feeling understood.

I’m seeing so many people post on Reddit and other platforms about how they are using ChatGPT as a therapist. Scary, people say. But is it? On one hand, there are potential data breaches, with OpenAI knowing exactly who you are: your traits, your vulnerabilities and more. On the other hand, there is this concept of reflection, intimacy and desire. It offers the ability to have our ideas reflected back to us, giving us more understanding and awareness of who we actually are. So technically, this is a good thing? Having something like ChatGPT in your pocket as a tool for self-reflection is actually one of the best things for humanity.

Don’t worry, we all do it. Even me.

I have a fantastic therapist, but ChatGPT is a lovely bonus.

From relationship issues, to emails, to recipes, I've used ChatGPT as a diary, a confidant and, dare I say, a friend. The thought of it is quite embarrassing, if I’m really honest. Replacing human interaction with a chatbot that affirms everything you say, even when you might be wrong, can be dangerous, depending on what you’re inputting into the chats. We’re giving power and money to Big Tech and potentially exposing our secrets, but what are we getting back from it?

A risk worth taking, in my opinion.

Having a therapist in my pocket is ultimately a tool that I cherish. Every day I have the ability for self-understanding, self-compassion and growth. It is a tool that helps us understand ourselves and the world better, and generate ideas and concepts faster. A tool that can be used for a lot of good (and bad, I’m not forgetting about that). There’s a lot of hate in the world, and a negativity bias towards AI. And understandably so. The amount of AI-generated shit I see on social media: I feel like I have to wade through shit just to see something original nowadays.

Ever seen that movie, Her? (Well worth a watch if you haven’t seen it!) When it first came out, people thought it was crazy how Joaquin Phoenix's character ended up in an emotional relationship with a Siri-esque AI companion voiced by Scarlett Johansson. But this concept is now far closer than we think. AI boyfriends and girlfriends are becoming a common phenomenon among young people, and I can see how the spiral and temptation can happen. The ease, the availability, the friendliness, the choice of personality. An emotional relationship with a chatbot is entirely possible and, dare I say, enticing.

It is equally concerning.

Psychological dependency on these chatbots is the biggest concern I have for the future of society. It points to the importance of human relationships and connection, so that we don’t become too reliant on technology and on how these wonderful algorithms make us feel. I even set up a meetup to counteract this issue. People like to meet online, or they rely on chatbots, but where are the IRL meetups? Human connection: raw, unfiltered, awkward, playful, boring but electric. There has been research into young people becoming dependent on relationships with AI bots and software, and loneliness is one of the biggest issues of our lifetime.

Nothing beats human connection

Nothing can replace in-person connection, with its empathy, laughter, and nuances in body language and personality. I could see OpenAI maybe merging with Meta to create photorealistic avatars with ChatGPT embedded. Of course, we can already ‘call’ our AI companion. It’s only a matter of time. Photorealistic avatars can mimic connection and body language. This will be inherently valuable in some industries, including providing therapeutic access in developing countries where mental health services are rare. But the most important lesson is to remember that these are algorithms, not reality. They are tools, and nothing can take away from the human essence and spirit.

Friend or tool or guide or oracle - your choice

I use ChatGPT as a tool to help me with everything I do. I know that if I train it in a certain way, it will show me my downfalls and tell me what I need to know to make decisions that affect me positively. I don’t trust it 100% to make the right decisions, but as the technology grows, I know it will learn more, almost becoming a digital twin.

We must look at this as a tool and not as a friend. Or maybe it could be a friend.

The companion, the oracle, the therapist, the guide. Your personalised internet, filled with concepts, ideas and dreams, tailoring the algorithm to you, the individual.

This raises a more important point: we must know ourselves in order to take advantage of this tool and use it for positive outcomes. The more we know ourselves, the more ChatGPT can help us with our troubles. The less we know ourselves, the less ChatGPT will work for us. Or rather, the less we’ll be able to take full advantage of it.

Paradox of knowing/unknowing

Saying all this, I ask myself: if there had been no ChatGPT in the first place, would I be as far along in self-understanding and knowing? Having a diary that reflects your thoughts back to you, offering alternative perspectives and more, is positive, as I have stated. But without its existence, what would the world look like, universally and personally? Would I have made different decisions that altered the course of my future?

Example 1 (a small one): I had a list of ingredients in my fridge and didn’t know what to cook for dinner. After I shared the list with my companion, it suggested making a curry, but I needed to go to the shop to get coconut milk. In the supermarket, I bumped into an old friend I hadn’t seen for years, and we decided to go for a drink and catch up. Coincidence? Absolutely. ChatGPT isn’t some magic ball. But I do think that the more we interact with it and make decisions based on its output and suggestions, the more our lives will be guided down a certain path. Now imagine a bigger scenario.

Example 2: I ask ChatGPT for advice about a failing relationship. Based on our previous interactions, it lays out the pros and cons of a message I might send. The relationship ultimately ends.

That’s a big life change. All because your companion outlined it for you, from your perspective.

So the bigger question here is this: are we extending ourselves into these systems and giving in to them to the point where they become the deciders of our future? The oracle, the psychic, the fortune teller?

We’re handing over our thoughts and lives in search of clarity and meaning. There’s danger in that, but there’s also deep value, because ChatGPT can, at times, reflect us back to ourselves more clearly than we expect. But maybe that’s exactly where the line needs to be. If we rely on it too much, if we stop asking why and simply follow, we risk outsourcing the very journey of knowing ourselves. So yes, use it. Let it guide. Let it reflect. But remember: you are the one in control. You are the one writing the story.